Deep layered machines

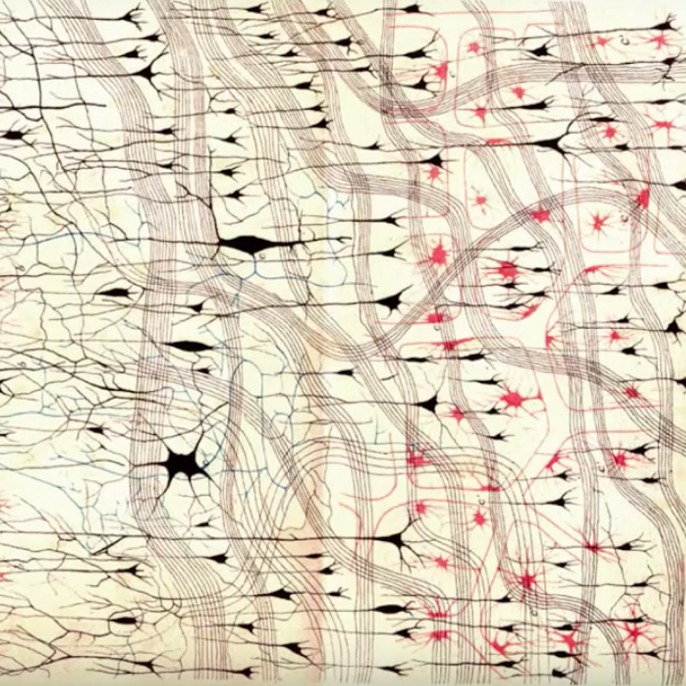

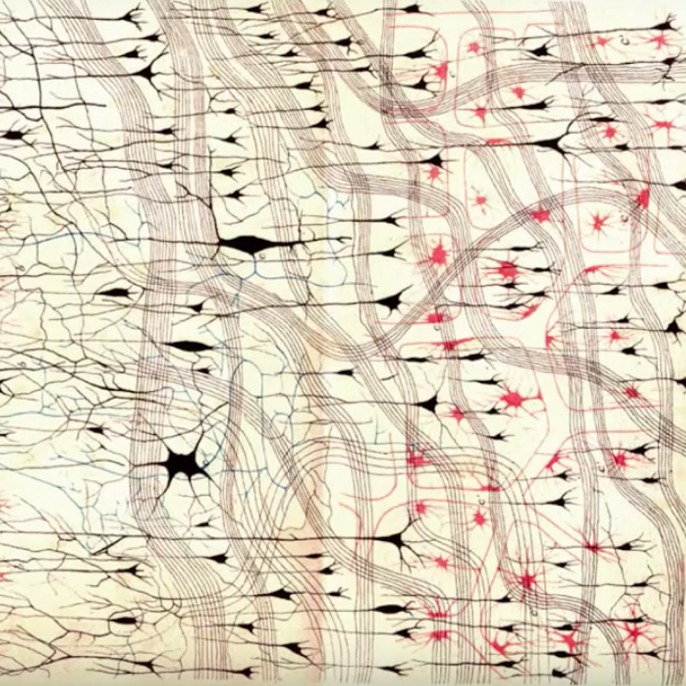

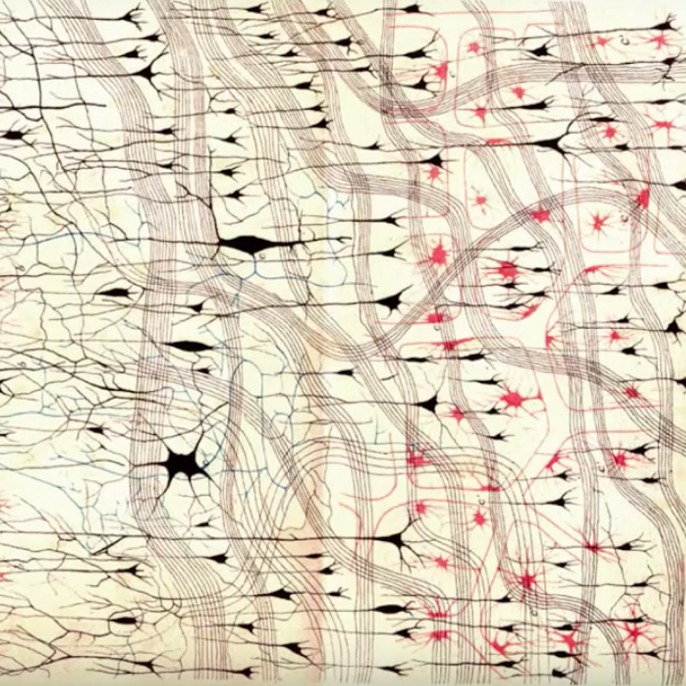

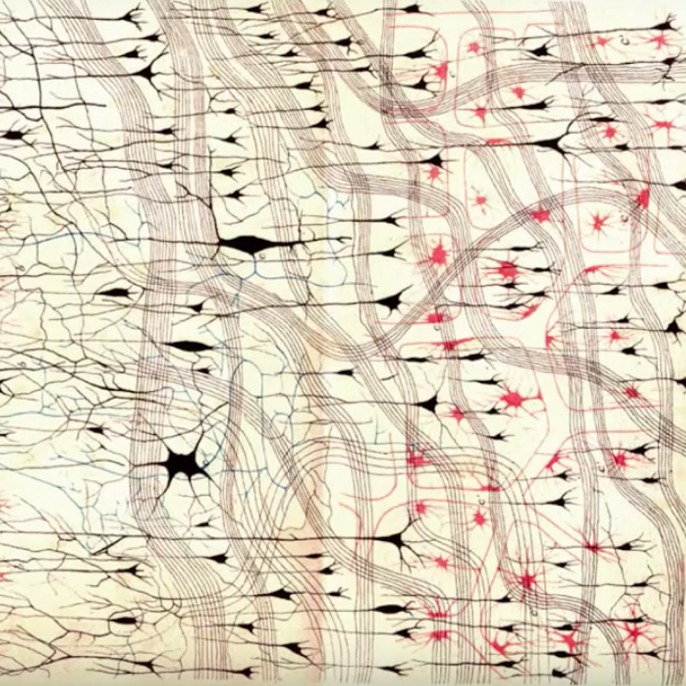

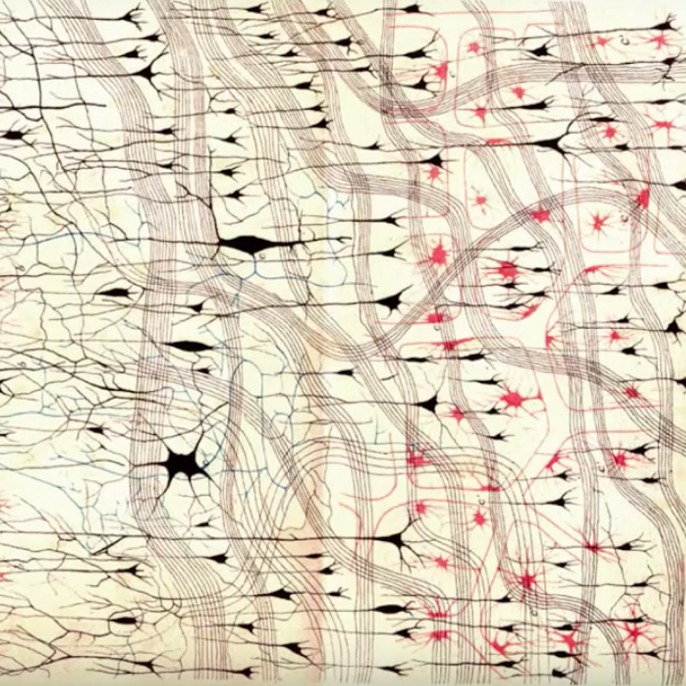

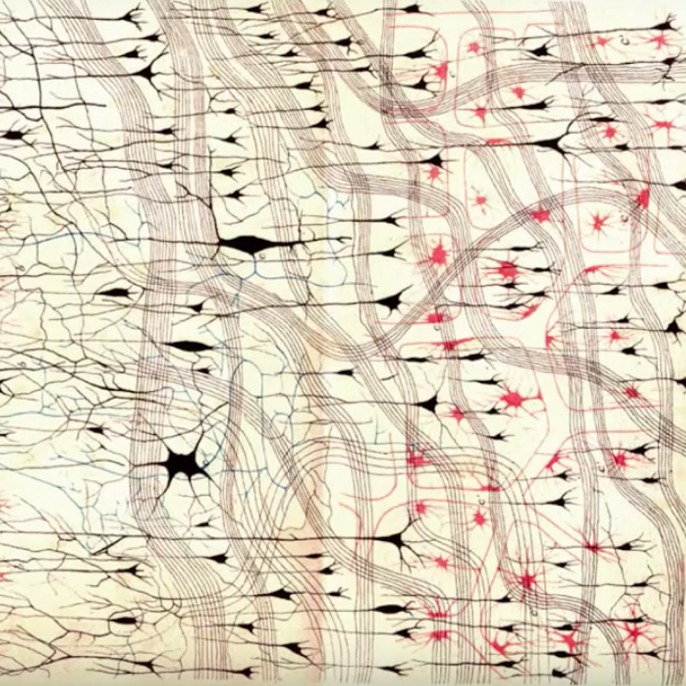

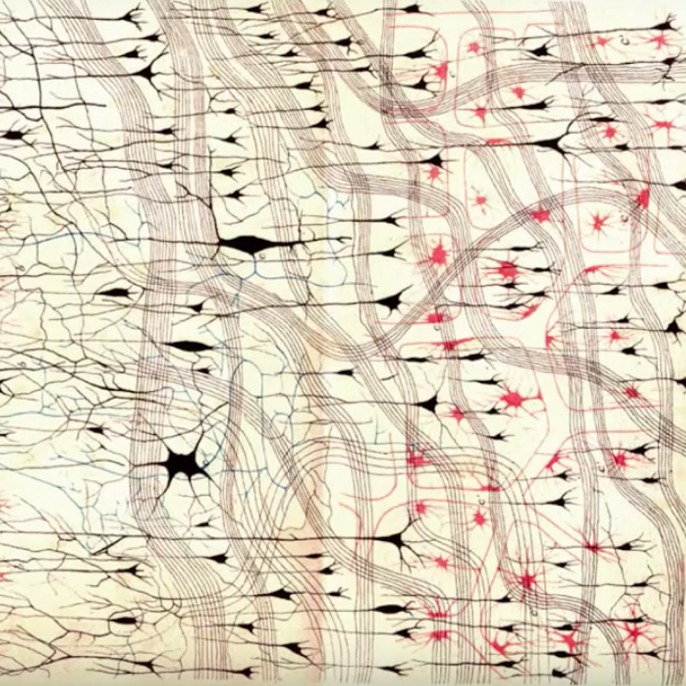

Neural networks

The ability of deep neural networks to generalize can be unraveled by using path-integral methods to compute the typical Boolean functions they implement.

The space of functions computed by deep layered machines

We study the space of Boolean functions computed by random layered machines, including deep neural networks and Boolean circuits. Investigating recurrent and layered feed-forward architectures, we find that both architectures realize the same space of functions. We show that, depending on the initial conditions and the computing elements used, the entropy of the Boolean functions computed by deep layered machines either increases or decreases monotonically with growing depth, and we characterize the space of functions computed in the large-depth limit.
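For very small numbers of inputs, the central quantity in the abstract (the entropy of the distribution over Boolean functions realized by randomly drawn layered machines) can be estimated by brute force. The sketch below uses a hypothetical toy model, not the path-integral method: each node applies a randomly chosen 2-input gate (here AND or OR) to two randomly chosen nodes of the previous layer. The function a circuit computes is read off as the truth table of one output node, and the entropy is a plug-in estimate over sampled circuits. The names `random_layered_circuit`, `truth_table`, and `function_entropy`, the gate set, the widths, and the `recurrent` option (one shared layer reused at every depth after the first) are all illustrative assumptions.

```python
import random
from collections import Counter
from math import log2

# Hypothetical 2-input computing elements.
AND = lambda a, b: a & b
OR = lambda a, b: a | b


def random_layered_circuit(n_inputs, width, depth, gates, rng, recurrent=False):
    """Toy model (assumption): each node = (gate, index_a, index_b) into the previous layer.

    If recurrent=True, one randomly drawn width->width layer is shared by all
    layers after the first (input) layer; otherwise every layer is drawn afresh.
    """
    def draw_layer(fan_in):
        return [(rng.choice(gates), rng.randrange(fan_in), rng.randrange(fan_in))
                for _ in range(width)]

    layers = [draw_layer(n_inputs)]
    shared = draw_layer(width) if recurrent else None
    for _ in range(depth - 1):
        layers.append(shared if recurrent else draw_layer(width))
    return layers


def truth_table(layers, n_inputs, output=0):
    """Evaluate the circuit on all 2**n_inputs inputs.

    Returns the output node's truth table as a tuple of bits, i.e. the
    Boolean function the circuit computes.
    """
    table = []
    for x in range(2 ** n_inputs):
        state = [(x >> i) & 1 for i in range(n_inputs)]
        for layer in layers:
            state = [g(state[a], state[b]) for g, a, b in layer]
        table.append(state[output])
    return tuple(table)


def function_entropy(n_inputs, width, depth, gates, samples, seed=0, recurrent=False):
    """Plug-in Shannon entropy (bits) of the empirical distribution over functions."""
    rng = random.Random(seed)
    counts = Counter(
        truth_table(random_layered_circuit(n_inputs, width, depth, gates, rng, recurrent),
                    n_inputs)
        for _ in range(samples)
    )
    return sum((c / samples) * log2(samples / c) for c in counts.values())


if __name__ == "__main__":
    for depth in (1, 2, 4, 8):
        h_ff = function_entropy(3, 8, depth, [AND, OR], samples=2000)
        h_rec = function_entropy(3, 8, depth, [AND, OR], samples=2000, recurrent=True)
        print(depth, round(h_ff, 3), round(h_rec, 3))
```

Exhaustive enumeration costs 2**n_inputs evaluations per circuit, so this only scales to a handful of inputs. The plug-in estimate is also biased low once the number of distinct functions approaches the number of samples. With n inputs there are 2**(2**n) Boolean functions, so the entropy is bounded by 2**n bits.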