Why AI works: The spontaneous emergence of a law of parsimony

2 PM, 7 Nov 2025

Our director Thomas Fink explains why the repeated application of simple logic functions induces a bias towards simplicity, with applications to AI.

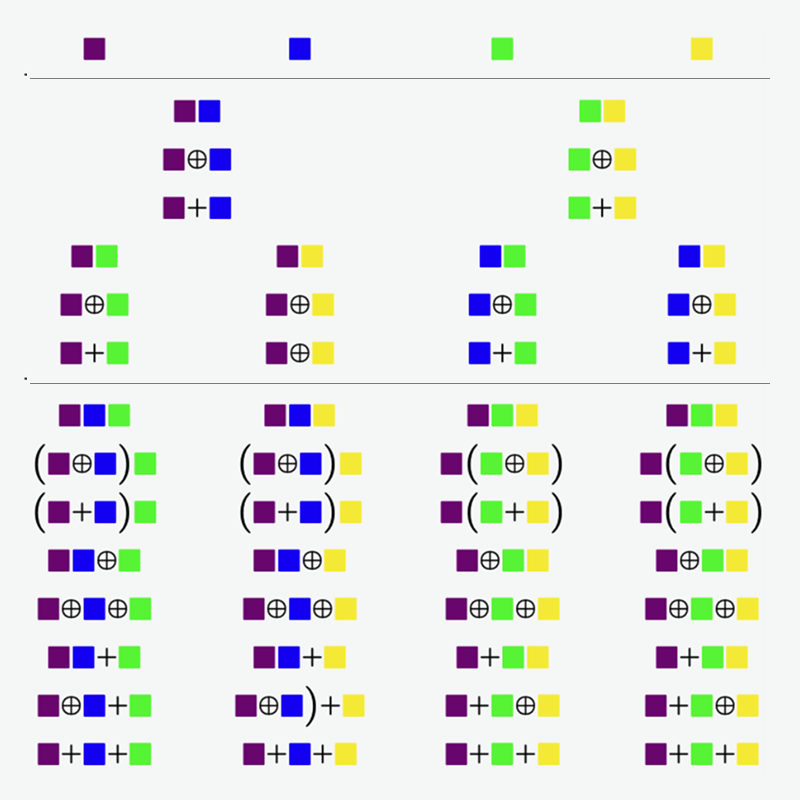

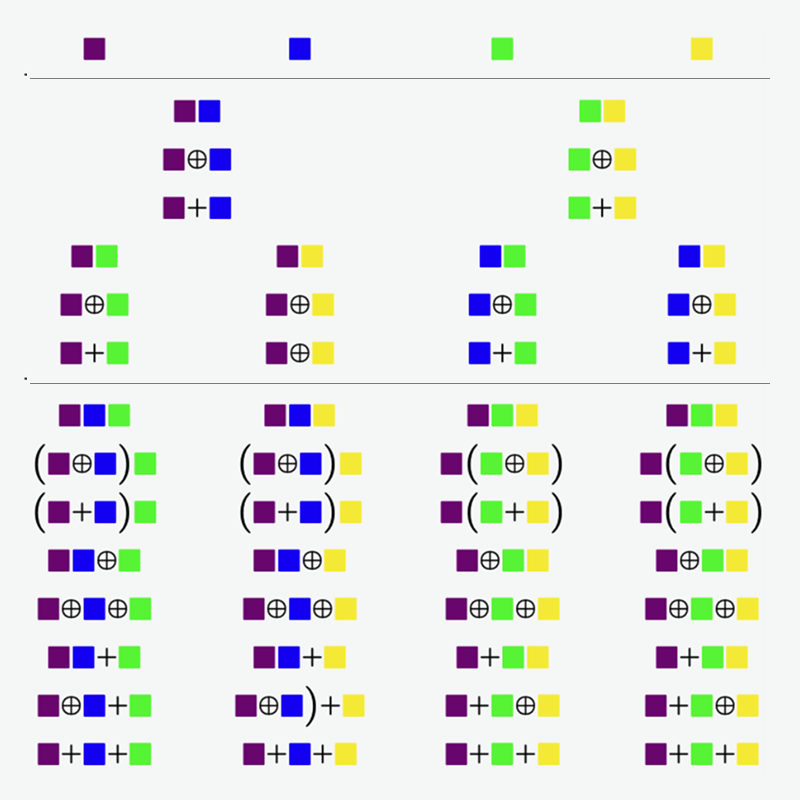

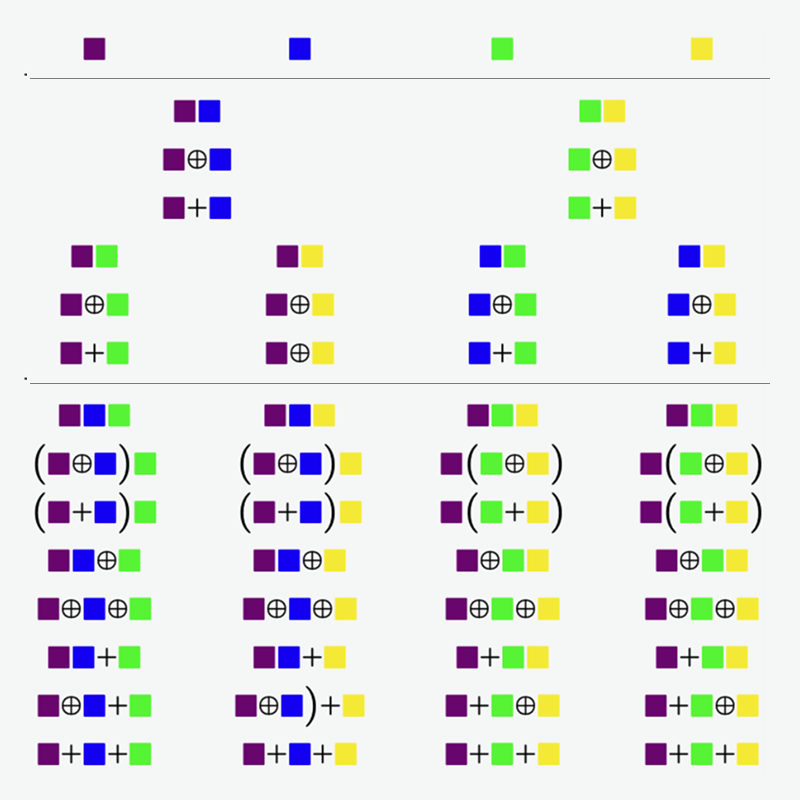

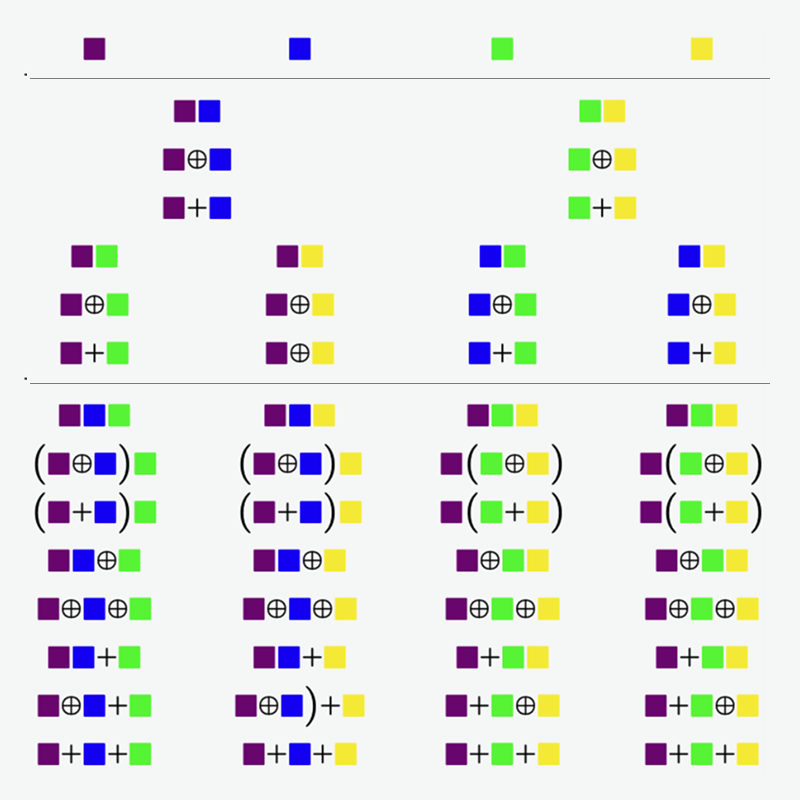

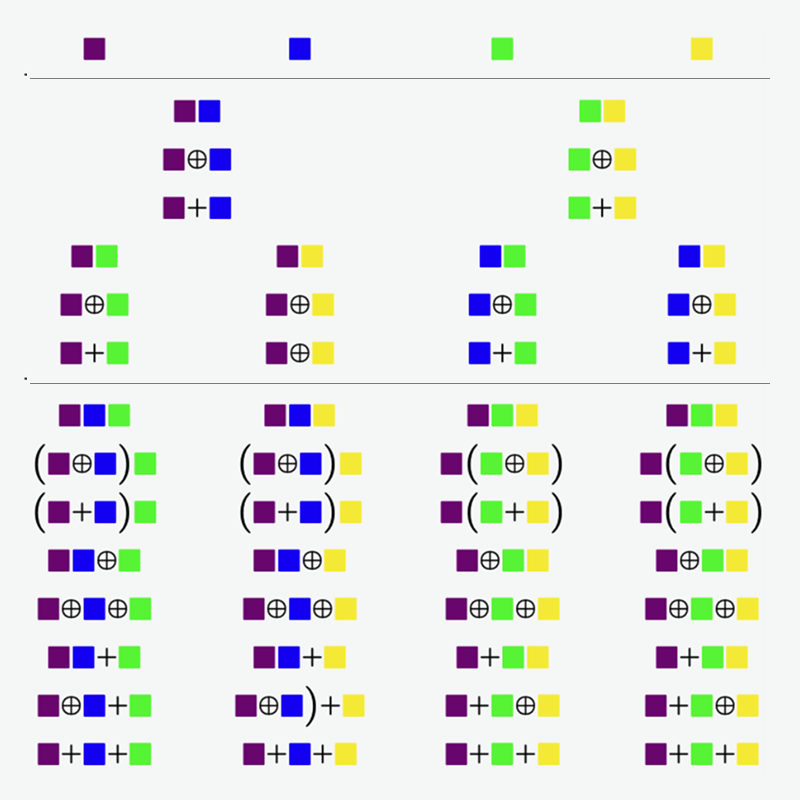

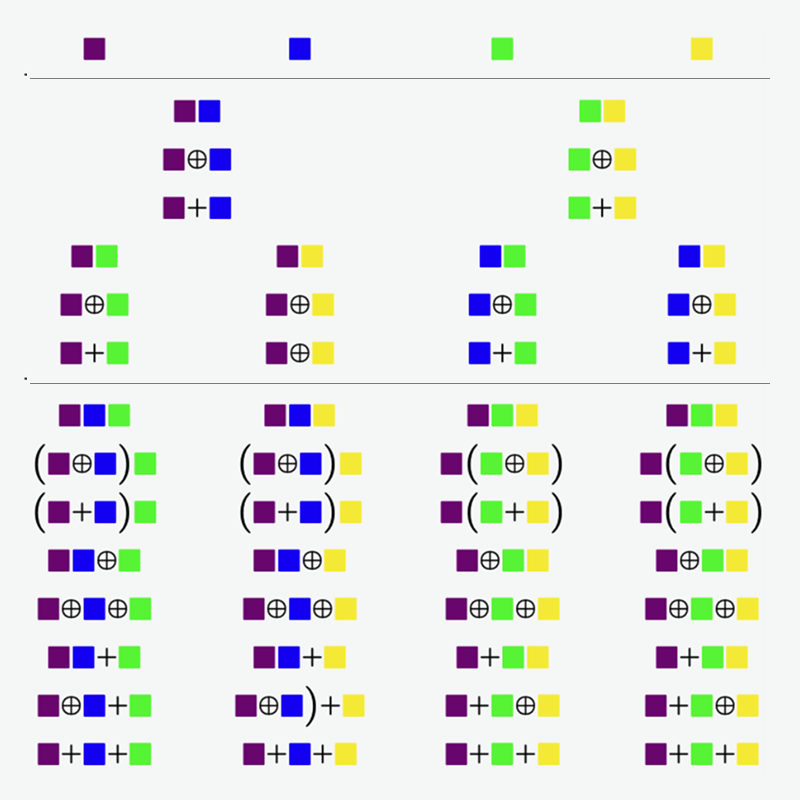

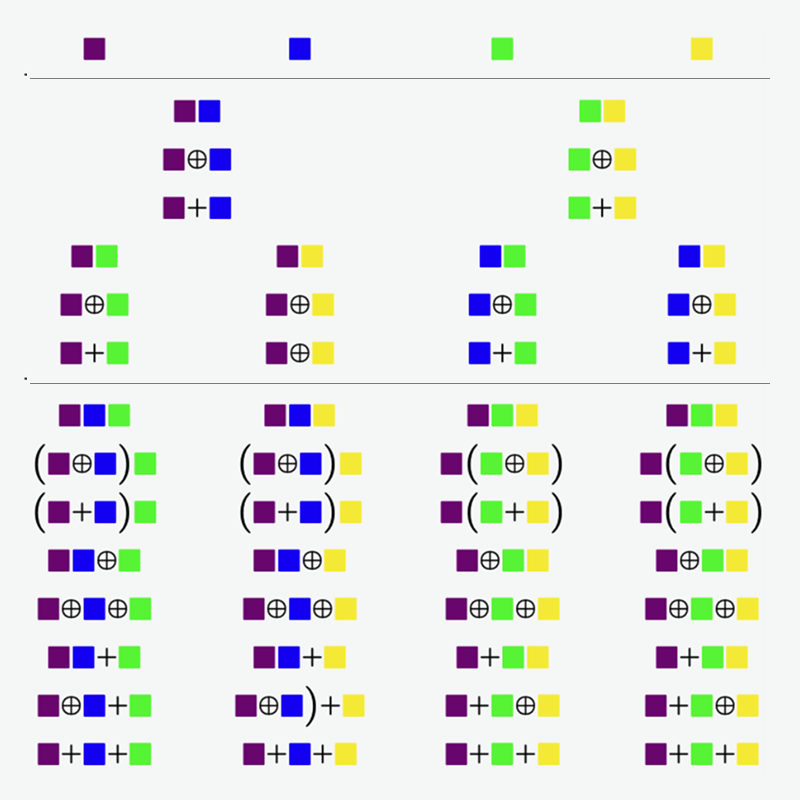

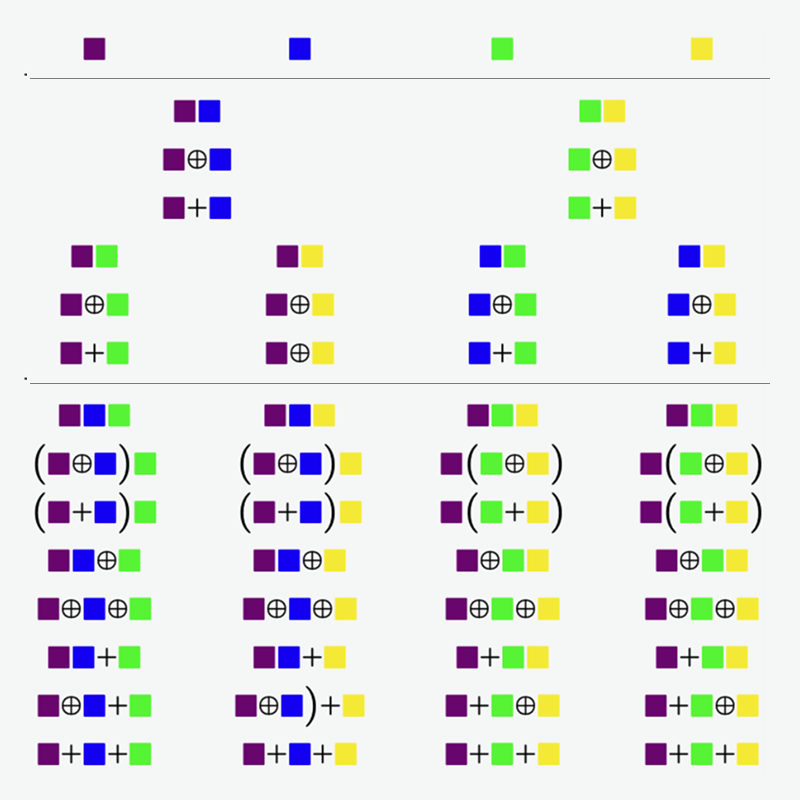

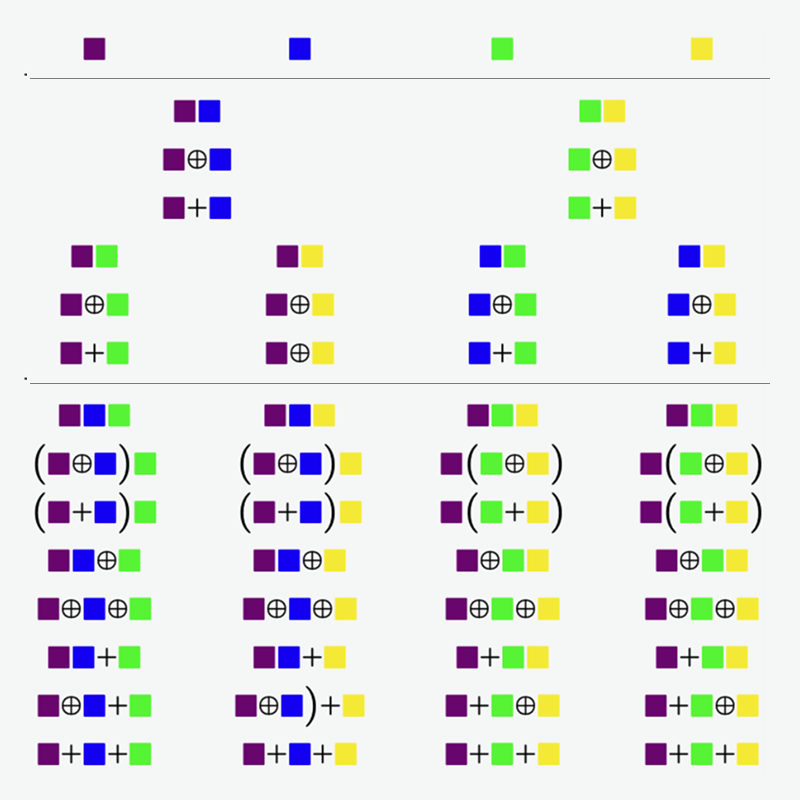

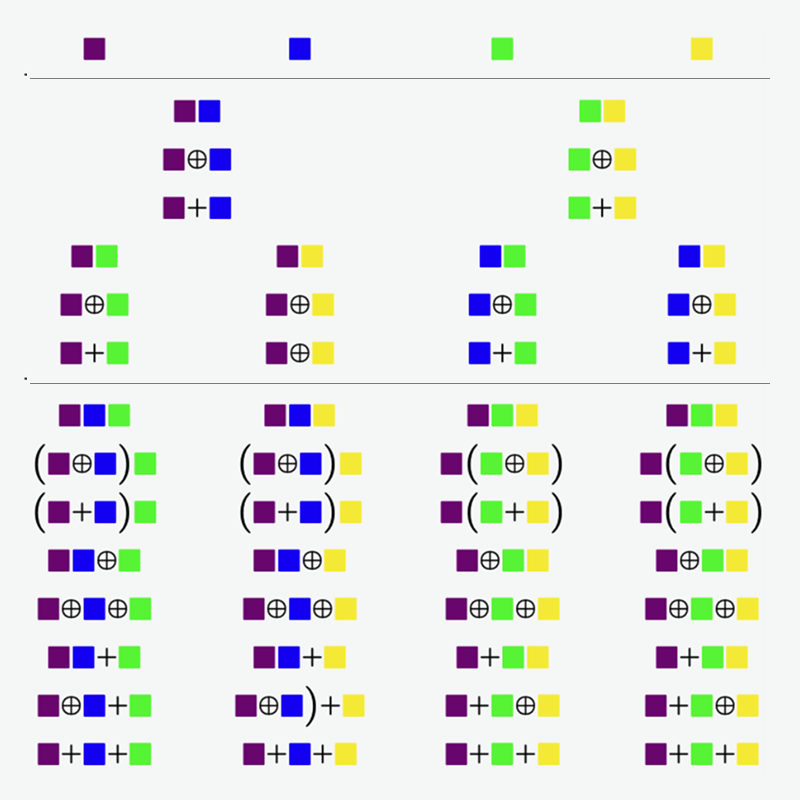

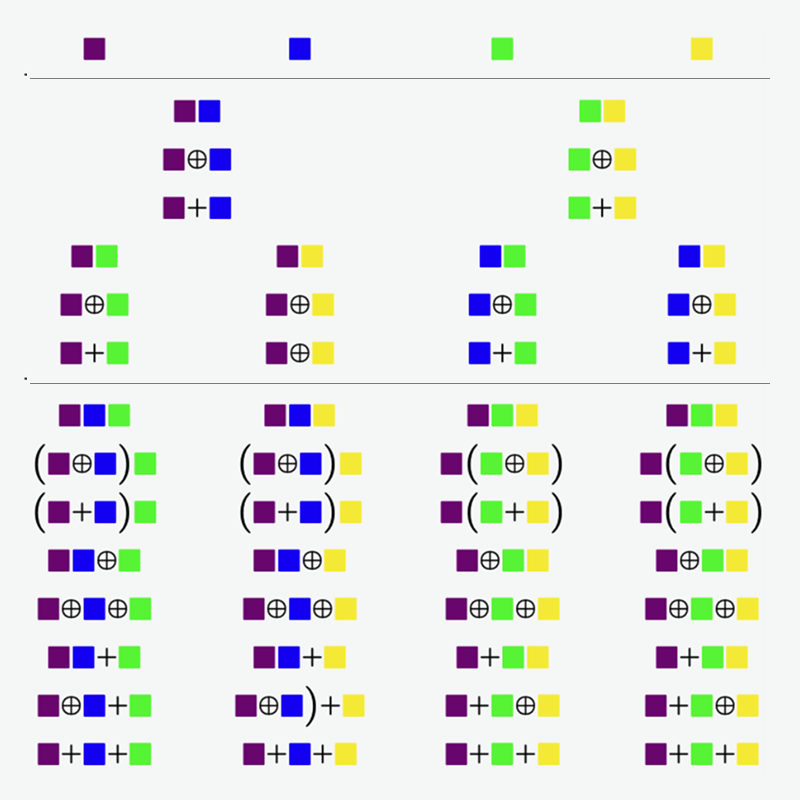

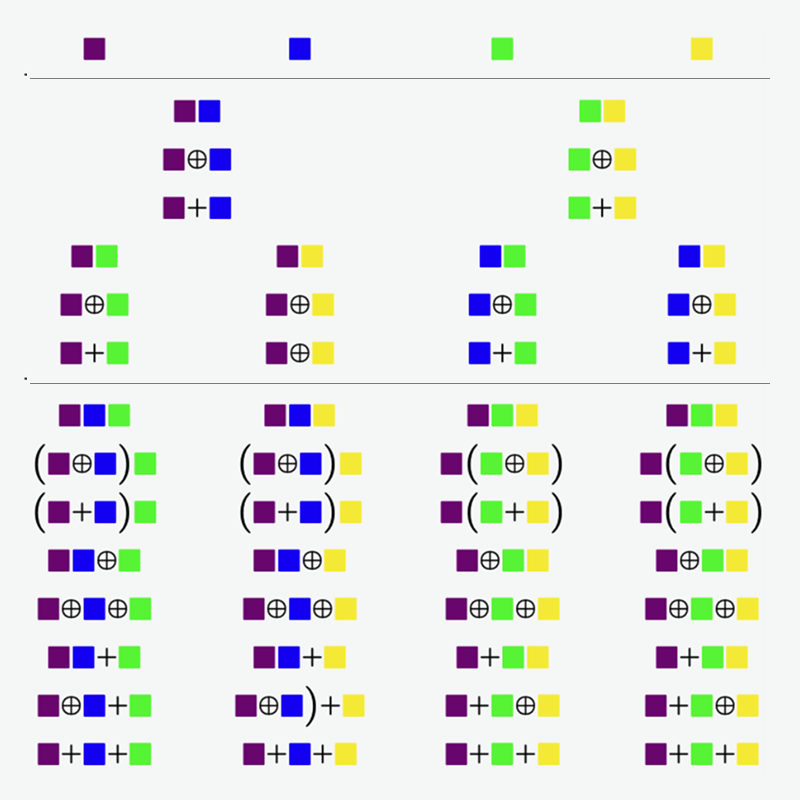

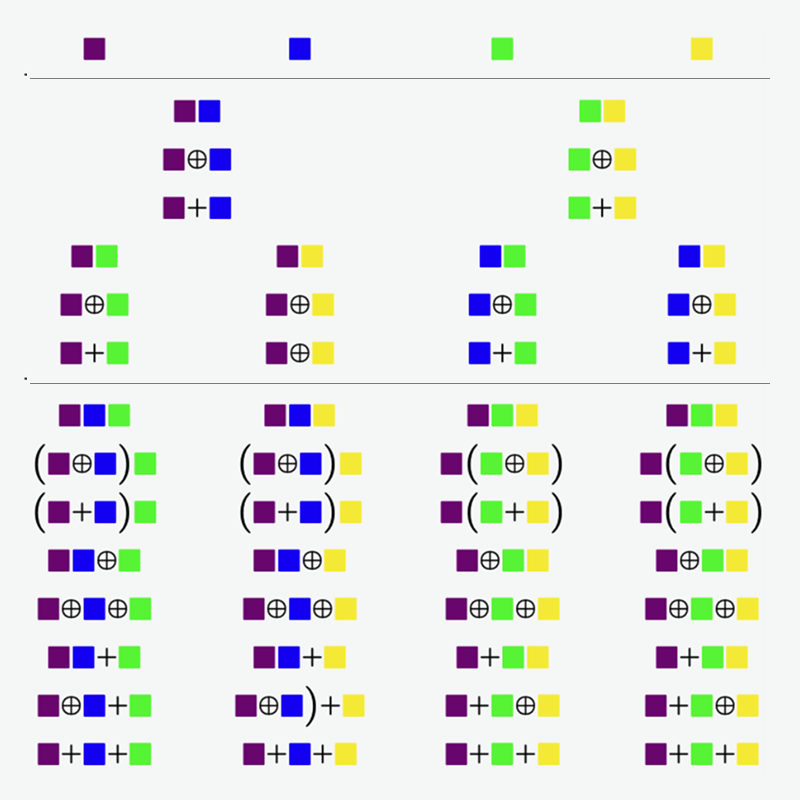

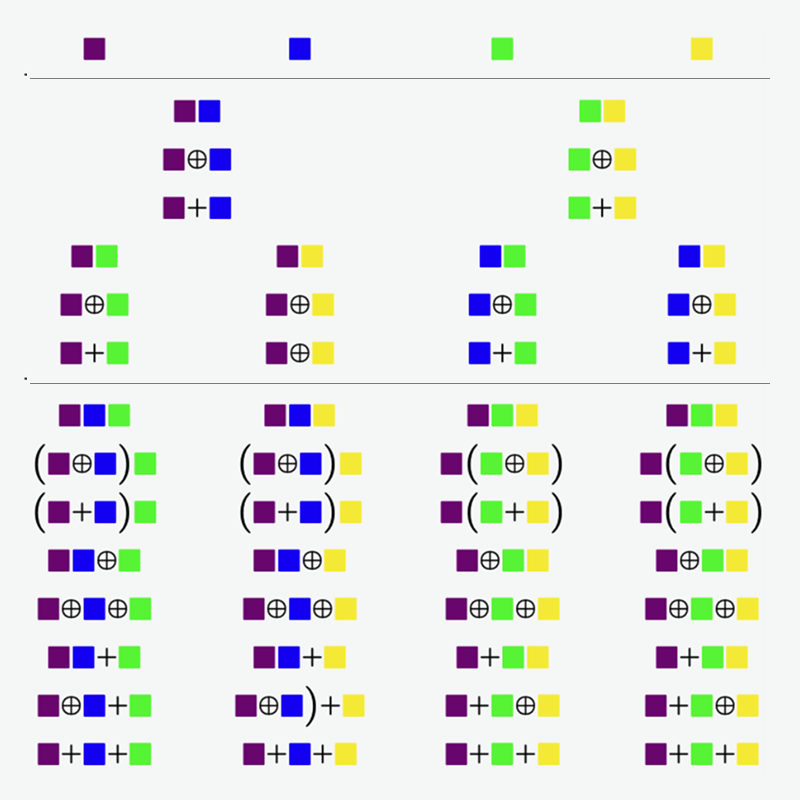

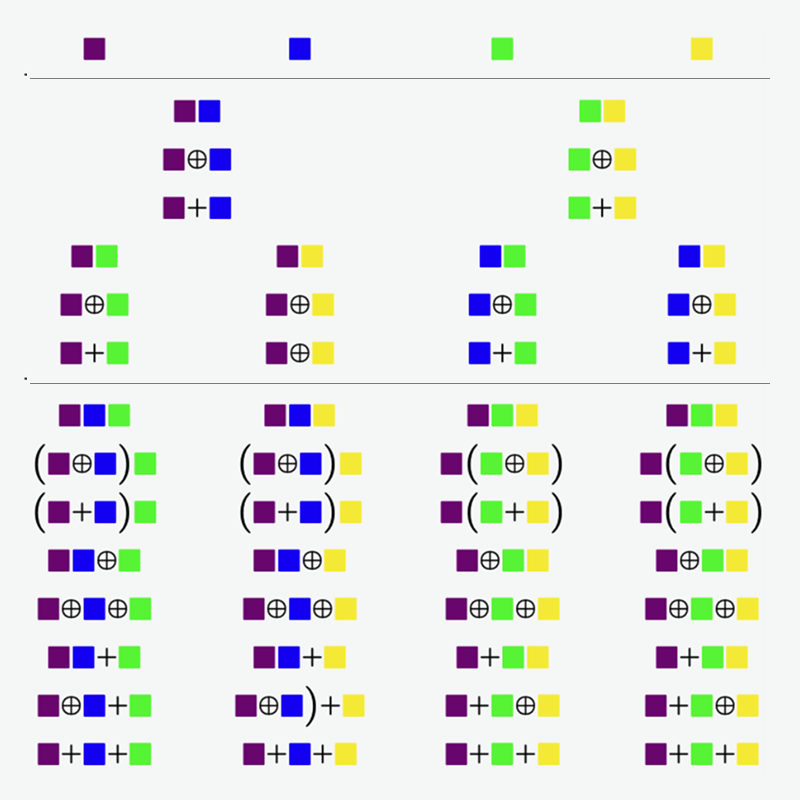

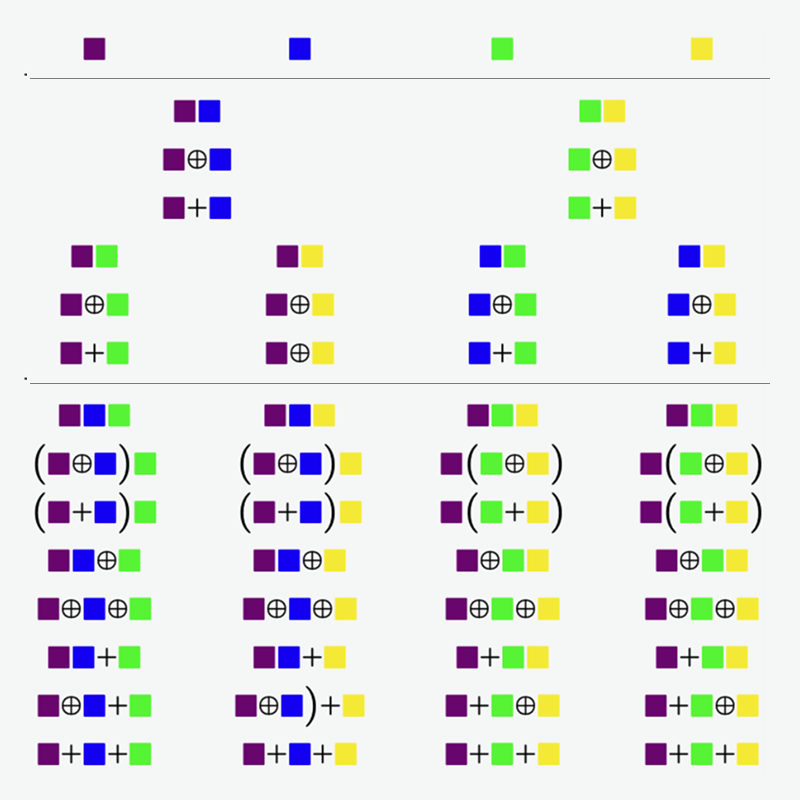

By analytically studying the repeated composition of simple Boolean functions, Dr Thomas Fink uncovers the spontaneous emergence of a law of parsimony. His result is the first exact solution of a nontrivial input-output map, and it suggests an explanation for why neural networks are biased towards simplicity in the models that they generate. The general result applies whenever the composition involves more than one input.
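To make the idea of repeated composition concrete, here is a minimal toy sketch. It is not Dr Fink's model or derivation. It assumes two inputs, a single NAND gate as the "simple Boolean function", and a full binary tree of gates of a chosen depth whose leaves are input variables picked uniformly at random. The names `variable_tables`, `compose_layer` and `distribution_after` are hypothetical helpers introduced only for this illustration. Each Boolean function of two inputs is stored as a 4-bit truth table, and the code tracks the exact probability of each function after every layer of composition.

```python
from fractions import Fraction
from itertools import product

N_INPUTS = 2                   # assumption: two input variables
N_ROWS = 2 ** N_INPUTS         # rows in a truth table
MASK = (1 << N_ROWS) - 1       # keeps results within N_ROWS bits


def variable_tables():
    """Truth tables of the input variables; bit r holds the value on row r."""
    return [
        sum(((row >> i) & 1) << row for row in range(N_ROWS))
        for i in range(N_INPUTS)
    ]


def nand(a, b):
    """Row-by-row NAND of two truth tables stored as bitmasks."""
    return ~(a & b) & MASK


def compose_layer(dist):
    """Distribution of NAND(g, h) when g and h are drawn independently from dist."""
    new = {}
    for (g, pg), (h, ph) in product(dist.items(), repeat=2):
        out = nand(g, h)
        new[out] = new.get(out, Fraction(0)) + pg * ph
    return new


def distribution_after(depth):
    """Exact distribution over truth tables after `depth` layers of composition."""
    tables = variable_tables()
    dist = {t: Fraction(1, len(tables)) for t in tables}
    for _ in range(depth):
        dist = compose_layer(dist)
    return dist


if __name__ == "__main__":
    for depth in range(1, 5):
        dist = distribution_after(depth)
        ranked = sorted(dist.items(), key=lambda kv: kv[1], reverse=True)
        summary = ", ".join(f"{t:04b}: {float(p):.3f}" for t, p in ranked)
        print(f"depth {depth}: {summary}")
```

Running the script prints, for each depth, the functions that can arise and how likely each one is, so one can check how evenly the probability is spread over the 16 possible two-input functions. Exact fractions are used instead of random sampling so that the probabilities carry no sampling noise.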

Dr Thomas Fink is the founding Director of the London Institute and Chargé de Recherche at the French CNRS. He studied physics at Caltech, Cambridge and the École Normale Supérieure. His work includes statistical physics, combinatorics and the mathematics of evolvable systems.